Linearly decoded VAE#

This notebook shows how to use the ‘linearly decoded VAE’ (LDVAE) model, which explicitly links the latent variables of cells to genes.

The scVI model learns low-dimensional latent representations of cells, which are mapped to the parameters of probability distributions that can generate counts consistent with what is observed in the data. In the standard version of scVI, these parameters for each gene and cell arise from applying neural networks to the latent variables. Neural networks are flexible and can represent non-linearities in the data. This comes at a price: there is no direct link between a latent dimension and any set of genes that covary along it.

The LDVAE model replaces the neural networks with linear functions. Now a higher value along a latent dimension will directly correspond to higher expression of the genes with high weights assigned to that dimension.

This leads to a generative model comparable to probabilistic PCA or factor analysis, but one that generates counts rather than real numbers. Using the scVI framework also allows variational inference, which scales to very large datasets and can make use of GPUs for additional speed.
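As a rough illustration of the generative process described above, the following NumPy sketch decodes one cell's latent vector with a single weight matrix and samples counts from the result. The names (`W`, `library_size`), the toy sizes, the softmax normalization, and the Poisson likelihood are simplifying assumptions made for this example; it is not the scvi-tools implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

n_latent, n_genes = 10, 5                  # toy sizes, chosen for illustration
W = rng.normal(size=(n_genes, n_latent))   # hypothetical gene-by-latent weights
z = rng.normal(size=n_latent)              # latent coordinates of one cell

# Linear decoder: one weighted sum per gene, with no hidden layers.
logits = W @ z

# Softmax turns the linear scores into expression proportions that sum to 1.
proportions = np.exp(logits - logits.max())
proportions /= proportions.sum()

# Scale by a library size and sample counts (Poisson assumed for brevity).
library_size = 1_000
counts = rng.poisson(library_size * proportions)
print(counts)
```

Because each gene's score is a plain weighted sum of the latent coordinates, the entries of `W` can be read directly as the contribution of each latent dimension to each gene.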

This notebook demonstrates how to fit an LDVAE model to scRNA-seq data, plot the latent variables, and interpret which genes are linked to latent variables.

As an example, we use the PBMC 10k dataset from 10x Genomics.

Note

Running the following cell will install tutorial dependencies on Google Colab only. It will have no effect on environments other than Google Colab.

!pip install --quiet scvi-colab

from scvi_colab import install

install()

import os

import tempfile

import matplotlib.pyplot as plt

import scanpy as sc

import scvi

import seaborn as sns

import torch

scvi.settings.seed = 0

print("Last run with scvi-tools version:", scvi.__version__)

Last run with scvi-tools version: 1.1.0

Note

You can modify save_dir below to change where the data files for this tutorial are saved.

sc.set_figure_params(figsize=(6, 6), frameon=False)

sns.set_theme()

torch.set_float32_matmul_precision("high")

save_dir = tempfile.TemporaryDirectory()

%config InlineBackend.print_figure_kwargs={"facecolor": "w"}

%config InlineBackend.figure_format="retina"

Initialization#

Load the data and select the top 1,000 highly variable genes with the seurat_v3 method.

adata_path = os.path.join(save_dir.name, "pbmc_10k_protein_v3.h5ad")

adata = sc.read(
    adata_path,
    backup_url="https://github.com/YosefLab/scVI-data/raw/master/pbmc_10k_protein_v3.h5ad?raw=true",
)

adata

AnnData object with n_obs × n_vars = 6855 × 16727

obs: 'n_genes', 'percent_mito', 'n_counts'

var: 'n_cells', 'highly_variable', 'encode', 'hvg_encode'

uns: 'protein_names'

obsm: 'protein_expression'

adata.layers["counts"] = adata.X.copy() # preserve counts

sc.pp.normalize_total(adata, target_sum=10e4) # note: 10e4 equals 1e5 (100,000) counts per cell

sc.pp.log1p(adata)

adata.raw = adata # freeze the state in `.raw`

sc.pp.highly_variable_genes(
    adata, flavor="seurat_v3", layer="counts", n_top_genes=1000, subset=True
)
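To make the normalization step concrete, here is a minimal NumPy sketch of per-cell scaling to a common total followed by a log1p transform. It is an illustration on a toy matrix, not scanpy's implementation; `toy_counts` and the value of `target_sum` are made up for the example.

```python
import numpy as np

# 2 cells x 3 genes of made-up counts.
toy_counts = np.array([[1.0, 3.0, 6.0],
                       [0.0, 5.0, 5.0]])
target_sum = 100.0  # illustrative per-cell total, not the value used in the tutorial

# Scale every cell (row) so that its counts sum to target_sum.
per_cell_total = toy_counts.sum(axis=1, keepdims=True)
normalized = toy_counts / per_cell_total * target_sum

# log1p compresses the dynamic range while keeping zeros at zero.
log_normalized = np.log1p(normalized)
print(log_normalized)
```

Note that the model in the next section is set up on the `counts` layer rather than on these transformed values.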

Create and fit LDVAE model#

The data were already restricted to the 1,000 most variable genes in the previous step.

We now initialize a LinearSCVI model. Here we set the latent space to have 10 dimensions.

scvi.model.LinearSCVI.setup_anndata(adata, layer="counts")

model = scvi.model.LinearSCVI(adata, n_latent=10)

model.train(max_epochs=250, plan_kwargs={"lr": 5e-3}, check_val_every_n_epoch=10)

Epoch 250/250: 100%|██████████| 250/250 [01:03<00:00, 3.94it/s, v_num=1, train_loss_step=348, train_loss_epoch=375]

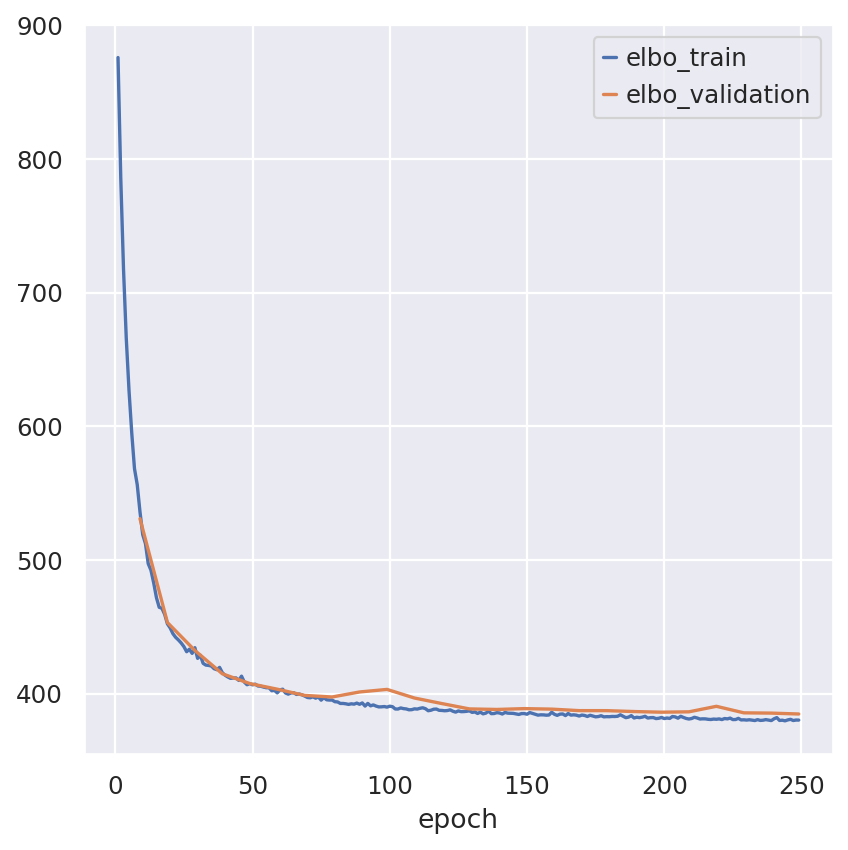

Inspecting the convergence

train_elbo = model.history["elbo_train"][1:]

test_elbo = model.history["elbo_validation"]

ax = train_elbo.plot()

test_elbo.plot(ax=ax)

<Axes: xlabel='epoch'>

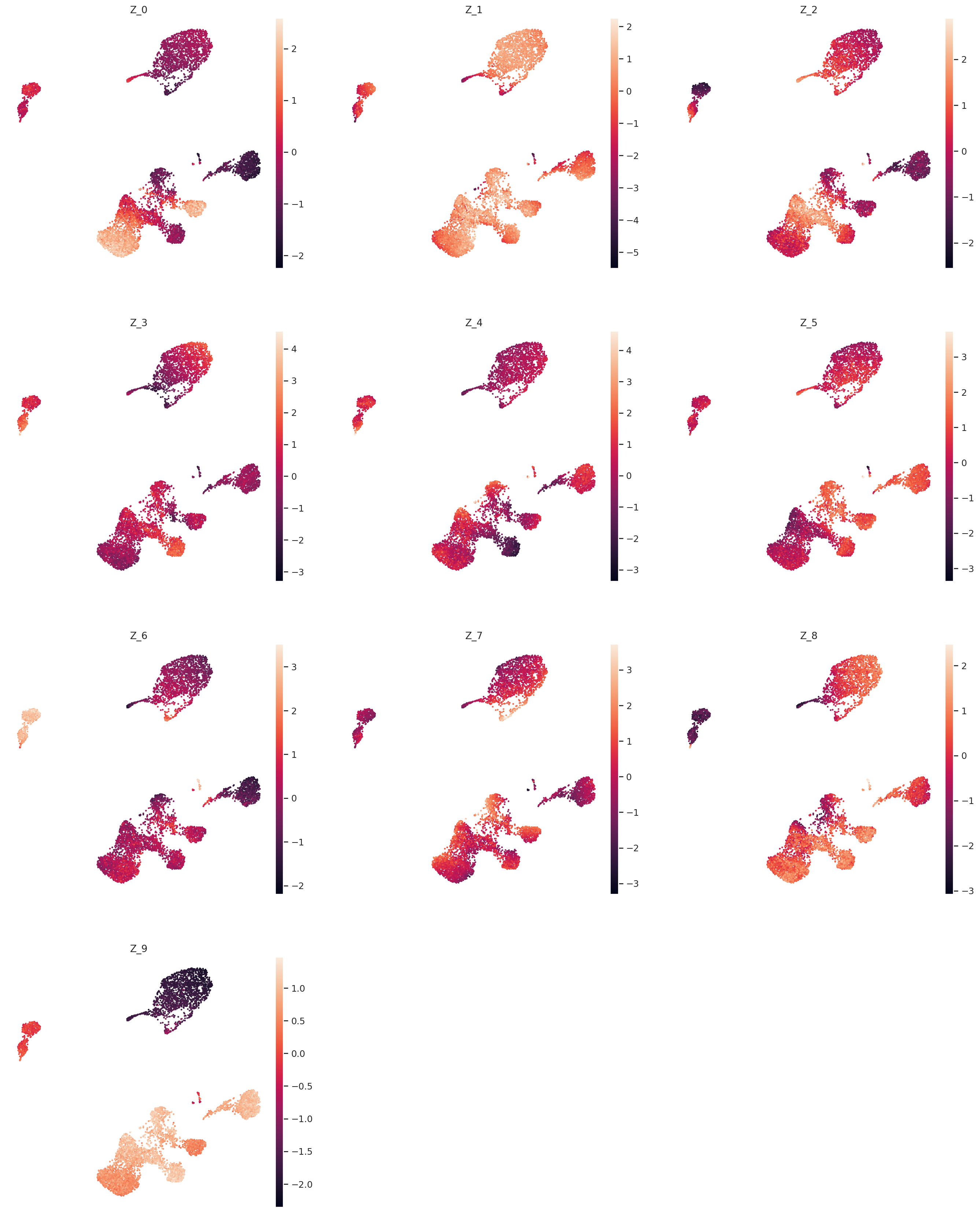

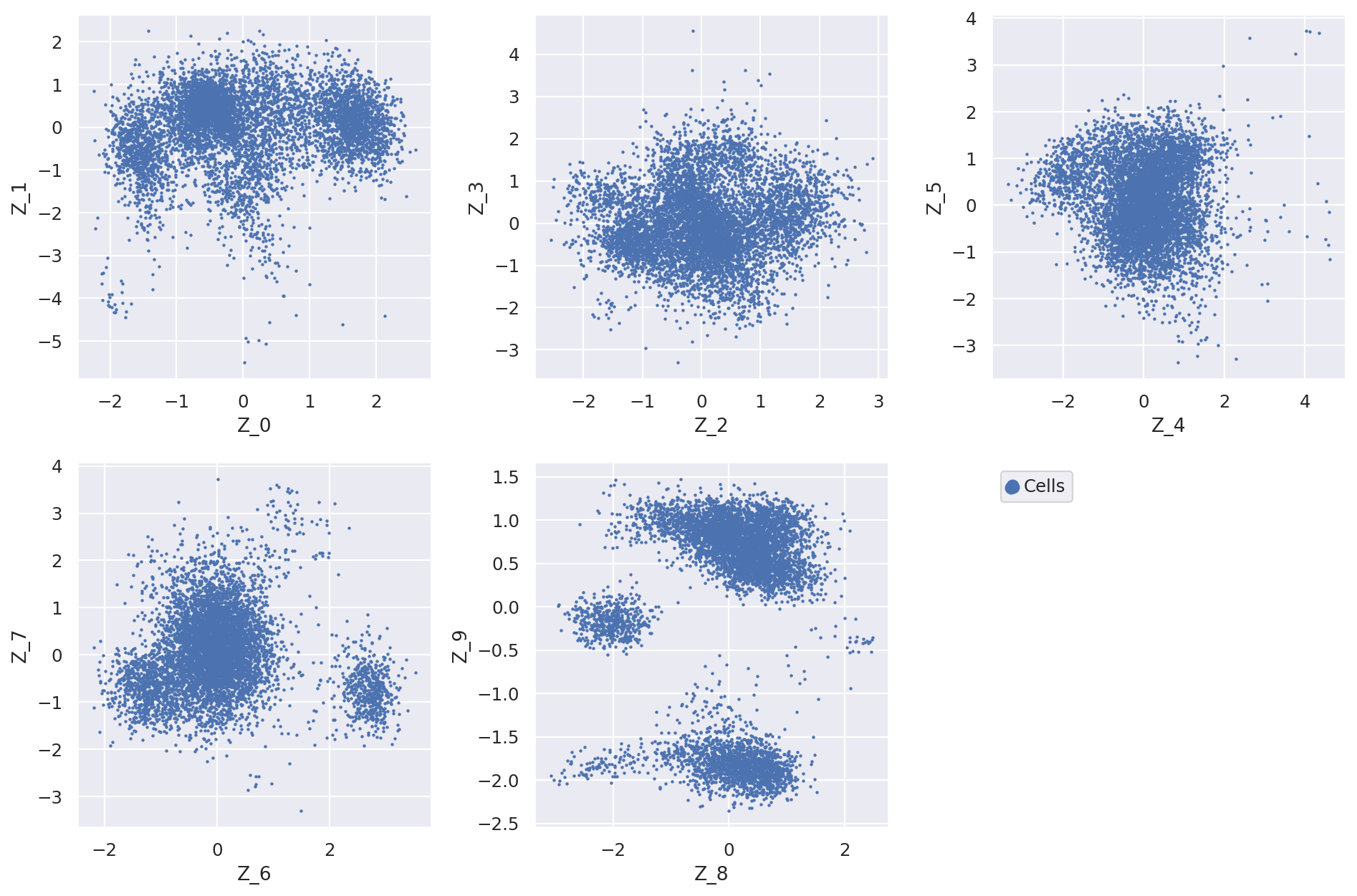
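Beyond inspecting the curves by eye, a plateau can also be checked numerically. The sketch below is one possible heuristic and is not part of scvi-tools: the helper `has_plateaued`, its `window` and `tol` parameters, and the toy loss values are all hypothetical.

```python
def has_plateaued(values, window=3, tol=0.01):
    """Return True if the mean of the last `window` values differs from the
    mean of the preceding `window` values by at most `tol` (relative)."""
    if len(values) < 2 * window:
        return False  # not enough history to compare two windows
    recent = sum(values[-window:]) / window
    previous = sum(values[-2 * window:-window]) / window
    return abs(recent - previous) <= tol * abs(previous)


# Made-up validation losses, as if recorded every few epochs.
toy_validation_loss = [520.0, 450.0, 410.0, 390.0, 381.0, 378.0, 377.0, 376.5, 376.2, 376.0]
print(has_plateaued(toy_validation_loss))
```

With a real run, the values could be taken from `model.history["elbo_validation"]` after converting it to a plain list, assuming it holds a single column of numbers.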

Extract and plot latent dimensions for cells#

From the fitted model we extract the (mean) values for the latent dimensions. We store the values in the AnnData object for convenience.

Z_hat = model.get_latent_representation()

for i, z in enumerate(Z_hat.T):
    adata.obs[f"Z_{i}"] = z

Now we can plot the latent dimension coordinates for each cell. A quick (albeit not complete) way to view these is to make a series of 2D scatter plots that cover all the dimensions. Since we are representing the cells by 10 dimensions, this leads to 5 scatter plots.

fig = plt.figure(figsize=(12, 8))

# One panel per consecutive pair of latent dimensions: (Z_0, Z_1), ..., (Z_8, Z_9).
for f in range(0, 9, 2):
    plt.subplot(2, 3, f // 2 + 1)
    plt.scatter(
        adata.obs[f"Z_{f}"], adata.obs[f"Z_{f + 1}"], marker=".", s=4, label="Cells"
    )
    plt.xlabel(f"Z_{f}")
    plt.ylabel(f"Z_{f + 1}")

# The sixth panel only holds the legend: draw the points, then cover them in white.
plt.subplot(2, 3, 6)
plt.scatter(
    adata.obs[f"Z_{f}"], adata.obs[f"Z_{f + 1}"], marker=".", label="Cells", s=4
)
plt.scatter(adata.obs[f"Z_{f}"], adata.obs[f"Z_{f + 1}"], c="w", label=None)
plt.gca().set_frame_on(False)
plt.gca().axis("off")

lgd = plt.legend(scatterpoints=3, loc="upper left")
# `legend_handles` replaces `legendHandles`, which was deprecated in Matplotlib 3.7.
for handle in lgd.legend_handles:
    handle.set_sizes([200])

plt.tight_layout()

The question now is: how do the latent dimensions link to genes?

For a given cell x, the expression of the gene g is proportional to x_g = w_(1, g) * z_1 + … + w_(10, g) * z_10. Moving from low values to high values in z_1 will mostly affect expression of genes with large w_(1, :) weights. We can extract these weights from the LDVAE model, and identify which genes have high weights for each latent dimension.

loadings = model.get_loadings()

loadings.head()

| index | Z_0 | Z_1 | Z_2 | Z_3 | Z_4 | Z_5 | Z_6 | Z_7 | Z_8 | Z_9 |
|---|---|---|---|---|---|---|---|---|---|---|
| AL645608.8 | 0.756368 | -0.100683 | 0.252074 | 0.032404 | -0.320864 | -0.224881 | -0.577871 | -0.506318 | -0.081928 | -0.094992 |
| HES4 | 0.830323 | -0.164451 | 0.253209 | -0.442601 | -0.400809 | 0.151515 | -0.316611 | -0.217516 | -0.282952 | -0.228449 |
| ISG15 | 0.446458 | 0.214321 | 0.270536 | -0.326161 | 0.047629 | -0.221070 | -0.220856 | -0.124097 | 0.036333 | 0.647635 |
| TNFRSF18 | -0.302383 | 0.107218 | 1.281761 | -0.547311 | -0.042539 | -0.420978 | 0.295821 | -0.471220 | 0.176347 | 2.154885 |
| TNFRSF4 | 0.290854 | 0.373043 | 1.157335 | -0.623632 | 0.037839 | -0.663865 | 0.086800 | -0.213453 | 0.248516 | 1.954238 |
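The link between weights and expression can be checked on toy numbers. The sketch below assumes a softmax over the linear scores as one way of making "proportional to" concrete, and uses a made-up weight matrix `W` rather than the fitted loadings. It shows that shifting one latent coordinate changes each gene's log-proportion in the order given by that dimension's weights.

```python
import numpy as np

rng = np.random.default_rng(1)
n_genes, n_latent = 6, 3                   # toy sizes
W = rng.normal(size=(n_genes, n_latent))   # hypothetical loadings (genes x latent)


def log_proportions(z):
    """Log of softmax(W @ z): log expression proportions for one cell."""
    scores = W @ z
    return scores - np.log(np.sum(np.exp(scores)))


z = rng.normal(size=n_latent)
z_shifted = z.copy()
z_shifted[0] += 1.0                        # move one unit along the first dimension

# Per-gene change in log-proportion caused by the shift.
delta = log_proportions(z_shifted) - log_proportions(z)

# Genes ranked by change match genes ranked by their weight on that dimension.
print(np.argsort(delta))
print(np.argsort(W[:, 0]))
```

The change for each gene equals its weight on the shifted dimension minus a constant shared by all genes, which is why sorting genes by weight, as done next, identifies the genes most affected by each dimension.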

For every latent variable Z, we extract the genes with the largest-magnitude loadings and separate genes with large negative values from genes with large positive values. We print the top 5 genes in each direction for each latent variable.

print(
    "Top loadings by magnitude\n---------------------------------------------------------------------------------------"
)

for clmn_ in loadings:
    # Sort ascending: the head holds the most negative loadings, the tail the most positive.
    loading_ = loadings[clmn_].sort_values()
    fstr = clmn_ + ":\t"
    fstr += "\t".join([f"{i}, {loading_[i]:.2}" for i in loading_.head(5).index])
    fstr += "\n\t...\n\t"
    fstr += "\t".join([f"{i}, {loading_[i]:.2}" for i in loading_.tail(5).index])
    print(
        fstr
        + "\n---------------------------------------------------------------------------------------\n"
    )

Top loadings by magnitude

---------------------------------------------------------------------------------------

Z_0: GNLY, -0.95 LMNA, -0.87 CES1, -0.85 CCL4L2, -0.84 LINC00996, -0.84

...

NELL2, 1.7 LEF1, 1.8 TSHZ2, 1.9 CCR7, 2.1 LRRN3, 2.4

---------------------------------------------------------------------------------------

Z_1: CDC20, -0.73 MYBL2, -0.71 PLD4, -0.7 TYMS, -0.7 IGLL5, -0.68

...

NRG1, 1.4 FOXP3, 1.4 ALDH1A1, 1.4 PMCH, 1.4 ARG1, 1.5

---------------------------------------------------------------------------------------

Z_2: HBA1, -1.1 CD160, -0.99 TCL1B, -0.95 TCL1A, -0.88 AL139020.1, -0.83

...

C19ORF33, 1.2 TNFRSF18, 1.3 COL5A3, 1.4 LMNA, 1.8 IFI27, 1.8

---------------------------------------------------------------------------------------

Z_3: FPR3, -1.7 C1QA, -1.6 TRDC, -1.6 C1QB, -1.4 XCL1, -1.4

...

SSPN, 0.91 IGLV6-57, 0.97 IGLV1-51, 0.99 COCH, 1.0 SHISA8, 1.0

---------------------------------------------------------------------------------------

Z_4: S100B, -1.4 SPTSSB, -1.1 EGR3, -1.1 SLC4A10, -1.0 AC004817.3, -0.93

...

FAM111B, 1.0 CLU, 1.1 SELP, 1.1 TRIM58, 1.2 FOXP3, 1.2

---------------------------------------------------------------------------------------

Z_5: CCR10, -1.2 CERS3, -1.1 EGR3, -1.0 CLEC9A, -0.99 TSHZ2, -0.95

...

GZMK, 1.2 LINC02446, 1.4 CD8B, 1.5 KLRC1, 1.8 KLRC2, 2.0

---------------------------------------------------------------------------------------

Z_6: FCGR3A, -1.0 AC007240.1, -0.98 CES1, -0.86 HBA1, -0.81 LPL, -0.8

...

CD24, 1.6 VPREB3, 1.6 PPP1R14A, 1.7 MME, 1.7 FAM30A, 1.7

---------------------------------------------------------------------------------------

Z_7: CXCL10, -1.7 GP9, -1.2 PF4, -1.1 TTC36, -1.0 MPIG6B, -1.0

...

CD8B, 1.0 CD8A, 1.0 CD1E, 1.1 ENHO, 1.2 FCER1A, 1.5

---------------------------------------------------------------------------------------

Z_8: ARG1, -1.2 SHISA8, -1.1 IGHE, -1.1 AC004585.1, -0.99 HLA-DQA1, -0.94

...

CLEC4C, 1.1 MAP1A, 1.2 ZNF683, 1.2 C7ORF57, 1.2 LCNL1, 1.2

---------------------------------------------------------------------------------------

Z_9: S100A8, -1.7 S100A9, -1.7 LYZ, -1.7 CYP1B1, -1.6 S100A12, -1.6

...

ZNF683, 2.4 TRGC1, 2.5 TRDC, 2.7 TRGC2, 2.7 GZMK, 2.7

---------------------------------------------------------------------------------------

It is important to keep in mind that unlike traditional PCA, these latent variables are not ordered. Z_0 does not necessarily explain more variance than Z_1.

The top genes for each dimension can be interpreted as tracking the main sources of structured variation in the data.

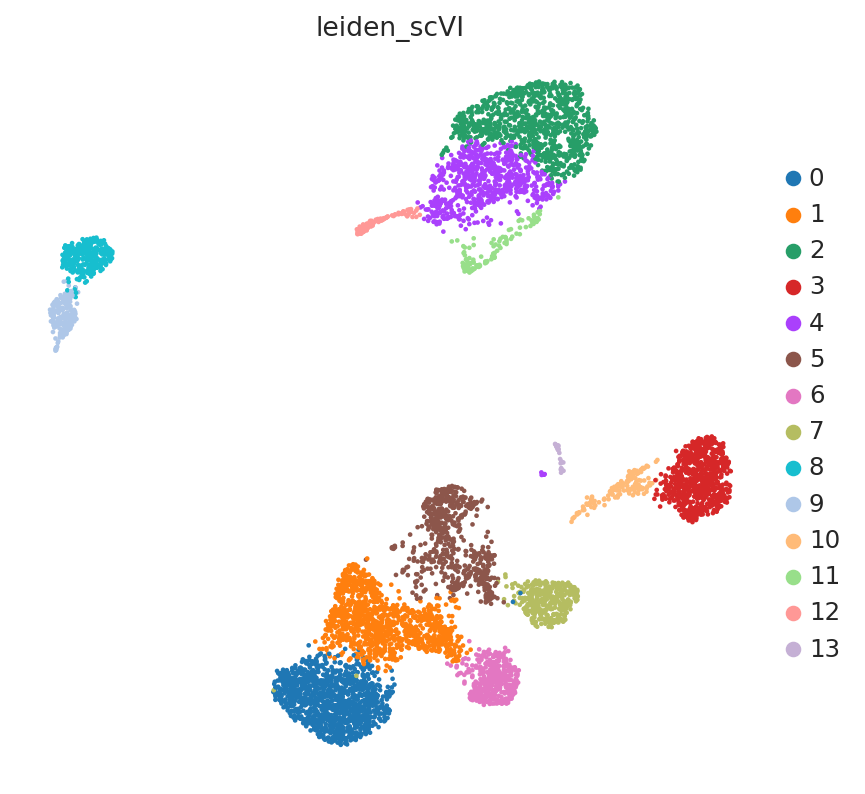

The LinearSCVI model supports the same functionality as the SCVI model, so all posterior methods work the same way. Here we show how to use scanpy to visualize the latent space.

SCVI_LATENT_KEY = "X_scVI"

SCVI_CLUSTERS_KEY = "leiden_scVI"

adata.obsm[SCVI_LATENT_KEY] = Z_hat

sc.pp.neighbors(adata, use_rep=SCVI_LATENT_KEY, n_neighbors=20)

sc.tl.umap(adata, min_dist=0.3)

sc.tl.leiden(adata, key_added=SCVI_CLUSTERS_KEY, resolution=0.8)

sc.pl.umap(adata, color=[SCVI_CLUSTERS_KEY])

zs = [f"Z_{i}" for i in range(model.n_latent)]

sc.pl.umap(adata, color=zs, ncols=3)